“More than 80% of companies now use AI agents in production, but most do not have an adequate testing framework for this smart system.”

Consider a recent real world scenario where AI customer service reveals sensitive competitive information when users manipulate their instructions. The system that supports votes starts processing conversations outside the intended scope, increasing the issue of privacy and financial advisors produces wrong loan calculations that cause significant risk exposure to the institution.

Basic Challenge? AI agents can behave unexpectedly in the production environment, create security vulnerability, compliance risk, and financial accountability potential that failed to be detected by traditional testing methods.

Uncomfortable truth? No one knows how to test this AI agent properly.

But why do we need AI to test AI agents?

Bottlenecks Development Speed Manual Testing

The creation of traditional manual tests requires weeks of QA efforts for complex AI interactions, creating developmental congestion that delays the time-market and exponentially increases costs when the team struggles with the impossibility of mathematical variations of unlimited AI responses manually.

Inadequate test coverage shows critical vulnerability

The human testing team can only make a small part of the required test scenario, leaving AI agents to achieve production with significant validation gaps that results in unexpected failures, security violations, and degradation of user experiences that damage brand reputation and user trust.

The extended feedback cycle hinders competitive advantage

Traditional testing workflow that takes days or weeks to validate AI changes creates long feedback loops that limit the ability of iteration rapidly, causing the organization to lose competitive advantage when market opportunities pass while they are waiting for completion testing.

Quality risk produces operational obligations

Without comprehensive testing, AI agents show unpredictable production behavior that leads to customer service failures, misunderstanding of wrong information, and system reliability problems that result in increasing support costs, customer churn, regulatory compliance problems, and potential financial obligations.

Resource constraints of compromise quality testing

The complexity and scope of testing AI agents requires significant human resources which become expensive on a scale on a scale, forcing the organization to compromise the quality of validation due to budget constraints and staffing, making the critical system not enough to be tested.

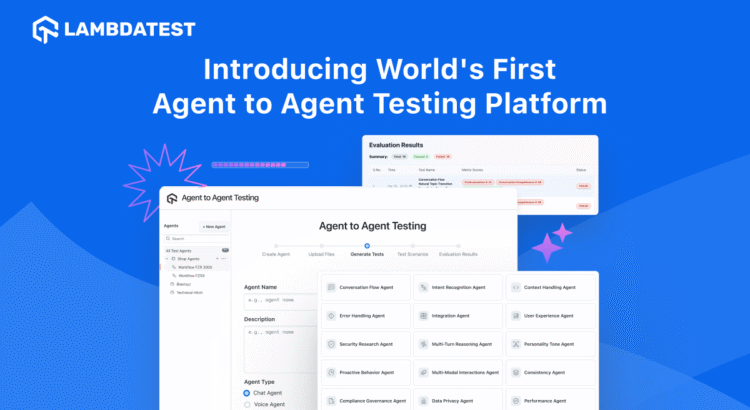

Introducing the world’s first agent testing platform

Lambdatest agent to the agent testing platform is the first complete solution of the industry that was built specifically to test the AI system. We use AI agents to test other AI agents, create an intelligent test approach that can handle the complexity of modern AI systems.

Instead of trying to predict manually every way that might interact with users with your AI, our platform changes the way the test is completely. Smart testing agents automatically explore, test, and validate your AI system by running thousands of different scenarios and challenges, providing comprehensive testing that grows with your AI complexity.

This new approach solves a critical problem: how to test the system correctly think and adapt. Our testing agents study and adjust their testing methods to match how you behave in real situations, giving you a complete test scope that cannot be provided by traditional testing methods.

Notes

NotesSend AI Agents Without Defects with Agents to Agent Testing Platforms! Register for Beta now!

Autonomous Test Generation on Scale

The multi-agent testing system we think as your user does, but faster, more comprehensive, and with patience to try thousands of edge cases. Whether you validate chatbots, voice assistants, or complex agent workflows, our platforms:

- Produce thousands of various test scenarios that are automaticallyfind critical bugs and security gaps passed by human examiners

- Adapt to your agent’s behavior patternsFound a dead end of conversation and logical inconsistencies in real-time

- Reach a leap 5-10x in the scope of the test compared to the traditional approach

- Test all types of interactions such as text, sound, and spread of hybrids with the same sophistication

Not only this, you can also validate your AI agent throughout the text, sound, or hybrid interaction, including a variety of cases and security gaps while ensuring consistent flow, intention, tone, and reasoning, with further examinations such as risk assessment and validation of behavior outside of traditional methods.

True Multi-Modal Understanding

Your requirements are not living in one format, and your testing is also not allowed. Beyond text! Our-agent-agen-to-agent testing platforms easily understand the context of:

- Pdf and documentation

- Confluence and Internal Wiki Pages

- API documentation and technical specifications

- Pictures, audio, and video content

- Behavior and log life system

This multi-modal context means that our testing agent understands not only what your system is doing, but what should be done. The test is not only comprehensive, they are targeted intelligently for your actual business requirements, ensuring more accurate, relevant, and impact validation.

Automatic multi-agent test generation

Instead of trying to predict manually every way that might interact with users with your AI, our platform changes the way the test is completely. Utilizing the AI agent team specifically to produce a variety of test scenarios and is rich in context, creating a series of high -quality tests that reflect real world interactions and edge conditions.

Smart testing agents automatically explore, test, and validate your AI system by running thousands of different scenarios and challenges, providing comprehensive testing that grows with your AI complexity. AI AI AI and Genai ensure that the test cases are more varied and driven by experts compared to the single general destination agent.

Comprehensive test scenario

Produce a comprehensive test scenario in various categories, ensuring the overall validation of your AI conversation system and application. The test scenario is automatically produced in various categories such as:

- Validation of intention recognition

- Conversation flow test with several validation criteria

- Security vulnerability assessment

- Behavioral consistency examination

- EDGE case exploration

Our testing agents study and adjust their testing methods to match how you behave in real situations, giving you a complete test scope that cannot be provided by traditional testing methods.

Smooth integration with hyperexecution

We have integrated with the strict platform of our-agent-agent testing with Lambdatest hyperexecution infrastructure for the execution of parallel clouds on a large scale. After the test scenario is produced:

- Run thousands of tests simultaneously opposite our cloud infrastructure

- Switching from ideas to feedback that can be followed up in minutesnot a day or week

- Scale of Testing Efforts Without Scaling Your Team or infrastructure

- Integrated smoothly with your existing ci/CD pipe

Produce the test scenario and run it on a scale with a minimum arrangement, provide feedback that can be followed up faster than before.

Insights that can be followed up

Assess the test results with adjusted response schemes or sample output for clear and categorized insight. Create data -based decisions about agent performance and optimization by evaluating key metrics such as:

- Detection and bias mitigation

- Completeness of responses

- Identification of hallucinations

- Behavioral consistency

- Security vulnerability assessment

Ensure relevance, accuracy, and efficiency in all your AI agents’ distribution with comprehensive performance analysis.

First driving advantage

Lambdatest agents to agent testing platforms are not just new products, this is the creation of a totally new category. We do not increase existing tests; We created the test methodology needed by the first world.

While your competitors are still looking for ways to validate their AI agents manually (or worse, do not validate them at all), you can use a system that has been tested thoroughly by smart agents specifically designed for this purpose.

Game Center

Game News

Review Film

Rumus Matematika

Anime Batch

Berita Terkini

Berita Terkini

Berita Terkini

Berita Terkini

review anime